House of Representatives (衆議院)

Not yet implemented:

- Date extraction (Japanese era names, kanji numerals)

- Submitter extraction
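The date item above could be approached roughly as follows. This is a minimal sketch and not part of the original scraper: it assumes dates appear in the text as an era name followed by kanji numerals (for example 平成十二年七月四日), and `ERA_BASE`, `kanji_to_int`, and `extract_wareki_date` are hypothetical helpers introduced here for illustration.

```python
import re
from datetime import date

# Gregorian year immediately before year 1 of each era (era year N == base + N).
ERA_BASE = {"明治": 1867, "大正": 1911, "昭和": 1925, "平成": 1988, "令和": 2018}

KANJI_DIGITS = {
    "〇": 0, "一": 1, "二": 2, "三": 3, "四": 4,
    "五": 5, "六": 6, "七": 7, "八": 8, "九": 9,
}

# Assumed pattern: <era><year>年<month>月<day>日, all numbers in kanji.
DATE_RE = re.compile(
    r"(明治|大正|昭和|平成|令和)"
    r"([元〇一二三四五六七八九十]+)年"
    r"([〇一二三四五六七八九十]+)月"
    r"([〇一二三四五六七八九十]+)日"
)


def kanji_to_int(s):
    """Convert a kanji numeral below 100 (e.g. 二十三, 十, 元) to an int."""
    if s == "元":  # 元年 means the first year of an era
        return 1
    if "十" in s:
        tens, _, ones = s.partition("十")
        tens_value = KANJI_DIGITS[tens] if tens else 1
        ones_value = KANJI_DIGITS[ones] if ones else 0
        return tens_value * 10 + ones_value
    # Positional form without 十, such as 二三 or 五
    n = 0
    for ch in s:
        n = n * 10 + KANJI_DIGITS[ch]
    return n


def extract_wareki_date(text):
    """Return the first era-style date found in text as a datetime.date, or None."""
    m = DATE_RE.search(text)
    if m is None:
        return None
    era, year, month, day = m.groups()
    return date(ERA_BASE[era] + kanji_to_int(year), kanji_to_int(month), kanji_to_int(day))
```

If the assumption about the date format holds, this could be applied to the scraped text with something like `df["stext"].map(lambda t: extract_wareki_date(t) if t else None)`; the guard is needed because `stext` is `None` when a question has no HTML link.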

    import time
    from urllib.parse import urljoin

    import requests
    from bs4 import BeautifulSoup
    from tqdm.notebook import tqdm  # replaces the deprecated tqdm_notebook

    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64; Trident/7.0; rv:11.0) like Gecko",
    }


    def get_info(url):
        """Fetch a question or answer page and return its body text."""
        time.sleep(3)  # wait between requests to avoid hammering the server
        r = requests.get(url, headers=headers)
        r.raise_for_status()
        soup = BeautifulSoup(r.content, "html5lib")
        info = soup.find("div", {"id": "mainlayout"})
        # Drop the breadcrumb navigation (if present) so only the body remains
        breadcrumb = info.find("div", {"id": "breadcrumb"})
        if breadcrumb:
            breadcrumb.extract()
        text = info.get_text("\n", strip=True)
        return text


    if __name__ == "__main__":
        result = []

        # Loop over Diet sessions 1 to 201
        for i in tqdm(range(1, 202)):
            # Sessions before 148 live under a different path with a zero-padded number
            if i < 148:
                link = f"http://www.shugiin.go.jp/internet/itdb_shitsumona.nsf/html/shitsumon/kaiji{i:03}_l.htm"
            else:
                link = f"http://www.shugiin.go.jp/internet/itdb_shitsumon.nsf/html/shitsumon/kaiji{i}_l.htm"

            time.sleep(3)
            r = requests.get(link, headers=headers)
            r.raise_for_status()
            soup = BeautifulSoup(r.content, "html5lib")

            trs = soup.select("table#shitsumontable tr")

            for tr in tqdm(trs):
                tds = tr.select("td")
                data = {}

                # Header rows have no <td> cells, so skip them
                if tds:
                    data["kaiji"] = i
                    data["number"] = int(tds[0].get_text(strip=True))
                    data["kenmei"] = tds[1].get_text(strip=True)
                    data["status"] = tds[2].get_text(strip=True)
                    # Each link column may be empty, so fall back to None
                    data["klink"] = urljoin(link, tds[3].a.get("href")) if tds[3].a else None
                    data["slink"] = urljoin(link, tds[4].a.get("href")) if tds[4].a else None
                    data["slink_pdf"] = (
                        urljoin(link, tds[5].a.get("href")) if tds[5].a else None
                    )
                    data["tlink"] = urljoin(link, tds[6].a.get("href")) if tds[6].a else None
                    data["tlink_pdf"] = (
                        urljoin(link, tds[7].a.get("href")) if tds[7].a else None
                    )
                    # Fetch the question (stext) and answer (ttext) bodies
                    data["stext"] = get_info(data["slink"]) if data["slink"] else None
                    data["ttext"] = get_info(data["tlink"]) if data["tlink"] else None

                    result.append(data)

    import pandas as pd

    df = pd.DataFrame(result)
    df.to_csv("syugin.csv", encoding="utf_8_sig")

House of Councillors (参議院)

Not yet implemented:

- Date extraction (Japanese era names, kanji numerals)

    import time
    from urllib.parse import urljoin

    import requests
    from bs4 import BeautifulSoup
    from tqdm.notebook import tqdm  # replaces the deprecated tqdm_notebook

    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64; Trident/7.0; rv:11.0) like Gecko",
    }


    def get_info(url):
        """Fetch a question or answer page and return its body text."""
        time.sleep(3)  # wait between requests to avoid hammering the server
        r = requests.get(url, headers=headers)
        r.raise_for_status()
        soup = BeautifulSoup(r.content, "html5lib")
        info = soup.select_one("div#ContentsBox > table")
        text = info.get_text("\n", strip=True)
        return text


    if __name__ == "__main__":
        result = []

        # Loop over Diet sessions 1 to 201
        for i in tqdm(range(1, 202)):
            link = f"https://www.sangiin.go.jp/japanese/joho1/kousei/syuisyo/{i:03}/syuisyo.htm"

            time.sleep(3)
            r = requests.get(link, headers=headers)
            r.raise_for_status()
            soup = BeautifulSoup(r.content, "html5lib")

            trs = soup.select("table.list_c tr")

            # Each entry spans three consecutive rows: title, number/submitter/HTML links, PDF links
            tmp = list(zip(trs[0::3], trs[1::3], trs[2::3]))

            for tr in tqdm(tmp):
                data = {}
                data["kaiji"] = i
                data["kenmei"] = tr[0].a.get_text(strip=True)
                data["number"] = int(tr[1].td.get_text(strip=True))
                data["name"] = tr[1].th.find_next_sibling("td").get_text(strip=True)

                # Links are located by their anchor text, which must match the page exactly
                slink = tr[1].find("a", string="質問本文(html)")
                data["slink"] = urljoin(link, slink.get("href")) if slink else None

                tlink = tr[1].find("a", string="答弁本文(html)")
                data["tlink"] = urljoin(link, tlink.get("href")) if tlink else None

                slink_pdf = tr[2].find("a", string="質問本文(PDF)")
                data["slink_pdf"] = (
                    urljoin(link, slink_pdf.get("href")) if slink_pdf else None
                )

                tlink_pdf = tr[2].find("a", string="答弁本文(PDF)")
                data["tlink_pdf"] = (
                    urljoin(link, tlink_pdf.get("href")) if tlink_pdf else None
                )

                # Fetch the question (stext) and answer (ttext) bodies
                data["stext"] = get_info(data["slink"]) if data["slink"] else None
                data["ttext"] = get_info(data["tlink"]) if data["tlink"] else None

                result.append(data)

    import pandas as pd

    df = pd.DataFrame(result)
    df.to_csv("sangin.csv", encoding="utf_8_sig")

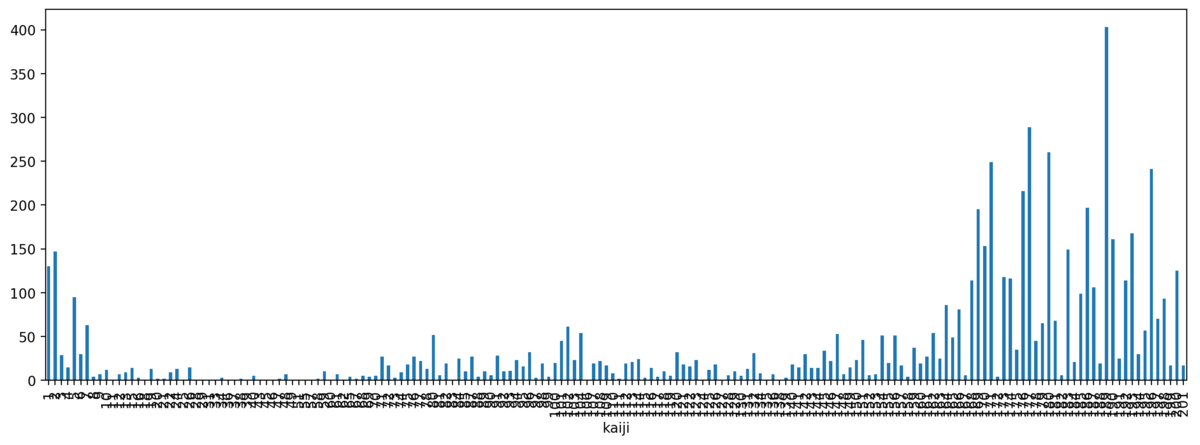

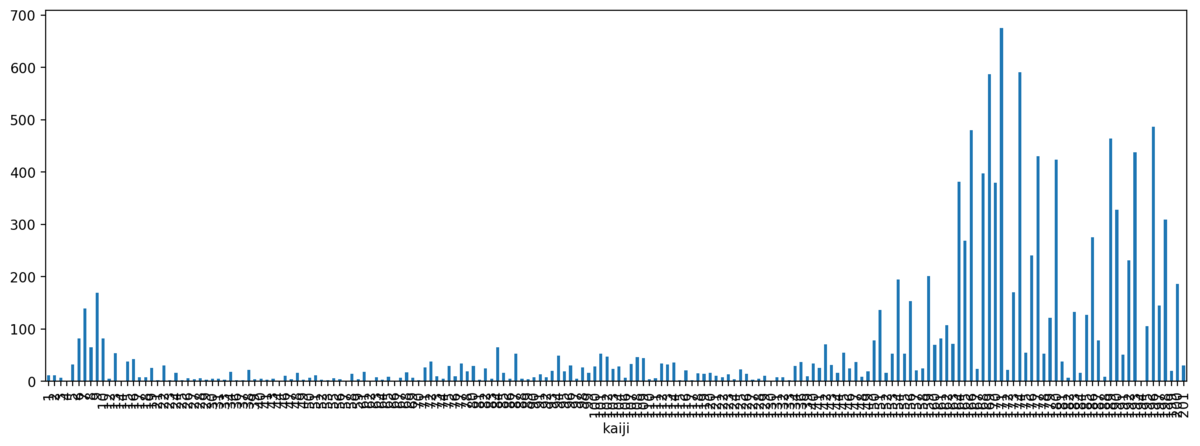

    # Count the non-null answer links per session and plot them as a bar chart
    s1 = df.groupby(["kaiji"])["tlink"].count()
    s1.plot.bar(figsize=(15, 5))

House of Representatives (衆議院)

House of Councillors (参議院)