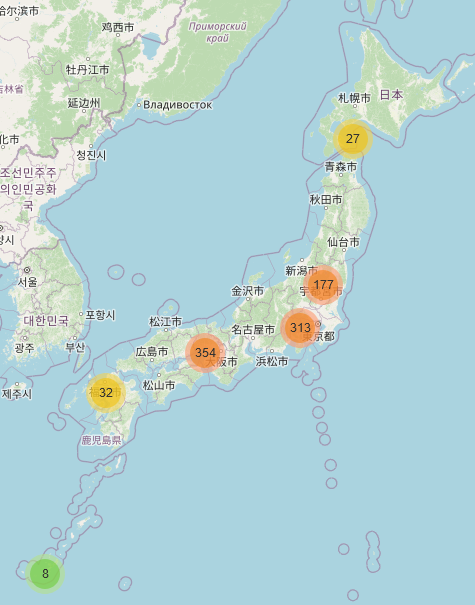

Compared with when the article above was written, the number of stores in the Tohoku and Kanto regions has increased.

Scraping location data from the map

CSV result

Scraping

```python
import time
import urllib.parse

import requests
from bs4 import BeautifulSoup

headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64; Trident/7.0; rv:11.0) like Gecko"
}


def fetch_soup(url, parser="html.parser"):
    # Download a page and parse it; raise on HTTP errors
    r = requests.get(url, headers=headers)
    r.raise_for_status()
    soup = BeautifulSoup(r.content, parser)
    return soup


def fetch_shop(url):
    print(url)
    shop = {}
    soup = fetch_soup(url)

    # The embedded map's src carries the coordinates in its "ll" query parameter
    link = soup.select_one("div.imap > iframe").get("src")
    qs = urllib.parse.urlparse(link).query
    qs_d = urllib.parse.parse_qs(qs)

    # Some shop pages have no "ll" parameter; those shops get no coordinates
    if qs_d.get("ll"):
        lat, lng = map(float, qs_d["ll"][0].split(","))
        shop["緯度"] = lat
        shop["経度"] = lng

    shop["店舗名"] = " ".join(
        [i.get_text(strip=True) for i in soup.select("h3.shop_title p.left")]
    )
    shop["郵便番号"] = soup.select_one("div.dboxleft").get_text(strip=True)
    shop["住所"] = soup.select_one("div.dboxright").contents[1].string
    shop["電話番号"] = (
        soup.select_one('div.shop_info > div > img[alt="TEL"]')
        .parent.find_next_sibling("div")
        .get_text(strip=True)
    )
    shop["営業時間"] = (
        soup.select_one('div#openday > div > img[alt="営業時間"]')
        .parent.find_next_sibling("div")
        .get_text(strip=True)
    )
    return shop


shop_list = []

# pref_id 1 to 47 covers the 47 prefectures
for i in range(1, 48):
    url = f"https://www.gyomusuper.jp/shop/list.php?pref_id={i}"
    soup = fetch_soup(url)

    for a in soup.select("div.list_box_l > div > div > a"):
        link = urllib.parse.urljoin(url, a.get("href"))
        shop = fetch_shop(link)
        shop_list.append(shop)
        time.sleep(2)  # pause between shop pages

    time.sleep(5)  # longer pause between prefectures
```
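The coordinate extraction is the step most likely to fail quietly, so it may help to look at it in isolation. The following is a minimal sketch, not the article's code: the helper name `extract_lat_lng` and both sample URLs are made up for illustration, and the real iframe `src` values on the site may look different.

```python
import urllib.parse


def extract_lat_lng(src):
    """Return (lat, lng) from a URL's "ll" query parameter, or None if unusable."""
    query = urllib.parse.urlparse(src).query
    params = urllib.parse.parse_qs(query)
    values = params.get("ll")
    if not values:
        return None
    parts = values[0].split(",")
    if len(parts) != 2:
        return None
    try:
        lat, lng = map(float, parts)
    except ValueError:
        return None
    return lat, lng


# Hypothetical iframe src values, invented for this example only
with_ll = "https://maps.example.com/maps?ll=35.681236,139.767125&z=15&output=embed"
without_ll = "https://maps.example.com/maps?q=Tokyo&output=embed"

print(extract_lat_lng(with_ll))
print(extract_lat_lng(without_ll))
```

Returning `None` instead of raising keeps the crawl going when a page embeds its map differently, which is presumably what happens for the shops that end up without coordinates.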

Converting to CSV

```python
import pandas as pd

df = pd.DataFrame(shop_list)

df.info()

# Rows with at least one missing value
df[df.isnull().any(axis=1)]

df.to_csv("gyoumu.csv", encoding="utf_8_sig")
```

```
<class 'pandas.core.frame.DataFrame'>
RangeIndex: 911 entries, 0 to 910
Data columns (total 7 columns):
 #   Column  Non-Null Count  Dtype
---  ------  --------------  -----
 0   緯度      895 non-null    float64
 1   経度      895 non-null    float64
 2   店舗名     911 non-null    object
 3   郵便番号    911 non-null    object
 4   住所      911 non-null    object
 5   電話番号    911 non-null    object
 6   営業時間    911 non-null    object
dtypes: float64(2), object(5)
memory usage: 49.9+ KB
```

Location data is missing for 16 of the 911 stores.
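To see which stores those are, the scraped list of dicts can be filtered directly, since a shop without an `ll` parameter simply has no latitude or longitude key. This is a rough sketch under that assumption; `sample_shops` and the store names are invented stand-ins for the real `shop_list`, and `missing_location` is a hypothetical helper.

```python
# Hypothetical sample in the same shape as shop_list; names are made up
sample_shops = [
    {"緯度": 35.0, "経度": 135.0, "店舗名": "Store A"},
    {"店舗名": "Store B"},
    {"緯度": 34.5, "経度": 133.2, "店舗名": "Store C"},
]


def missing_location(shops):
    """Return the shops that lack a latitude or longitude entry."""
    return [s for s in shops if s.get("緯度") is None or s.get("経度") is None]


missing = missing_location(sample_shops)
print(f"{len(missing)} of {len(sample_shops)} shops have no coordinates")
for s in missing:
    print(s["店舗名"])
```

Run against the real `shop_list`, the same function would give the names to look up by hand if the missing coordinates need to be filled in.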

Map

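```python
import folium
from folium import plugins

# Named m rather than map to avoid shadowing the built-in map()
m = folium.Map(location=[34.06604300, 132.99765800], zoom_start=6)

marker_cluster = plugins.MarkerCluster().add_to(m)

# Skip the stores without coordinates; a marker cannot be placed at NaN
for i, r in df.dropna(subset=["緯度", "経度"]).iterrows():
    folium.Marker(
        location=[r["緯度"], r["経度"]],
        popup=folium.Popup(r["店舗名"], max_width=300),
    ).add_to(marker_cluster)

m
```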